Resource Management in a Kubernetes Cluster: Understanding Resources

Understanding Kubernetes resources and Quality of Service classes

Kubernetes is a container orchestration platform that automates the deployment, scaling, and management of containerized applications. In this post, we will look at resource management in Kubernetes, which involves allocating resources such as CPU and memory to the containers running in a cluster.

Understanding Resources

At the heart of a Kubernetes cluster is an abstraction called a pod, which groups containers together and manages them as a unit. Each container in a pod can be assigned a certain amount of CPU and memory, and the pod's resources are the sum of its containers' resources. Pods run on nodes, and each node has a fixed amount of memory and CPU, so only a certain number of pods can run in a cluster. It is the duty of the Kubernetes cluster admin to assign resources to pods so that utilization is high while enough room remains to deal with increasing load and failures.

The Kubernetes scheduler decides where to place a pod in the cluster, that is, which node has enough spare resources. To schedule pods effectively, the scheduler must know the resource requirements of each pod. This is where Kubernetes resource requests and limits kick in.

Container Resources

Requests and Limits

Kubernetes resource configuration consists of two components: requests and limits.

A request specifies the minimum amount of a resource that a container needs to run.

A limit specifies the maximum amount of a resource that a container is allowed to use.

    resources:
      requests:
        memory: "200Mi"
        cpu: "400m"
      limits:
        memory: "400Mi"
        cpu: "800m"
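For context, the `resources` block sits under each container in a Pod manifest. Below is a minimal sketch of a complete manifest using the same values. The pod name `resource-demo`, the container name `app`, and the `nginx` image are placeholders chosen for illustration and are not part of the original example.

```yaml
# Minimal Pod manifest sketch. Names and image are illustrative placeholders.
apiVersion: v1
kind: Pod
metadata:
  name: resource-demo        # hypothetical pod name
spec:
  containers:
  - name: app                # hypothetical container name
    image: nginx             # any image works here; nginx is only an example
    resources:
      requests:
        memory: "200Mi"      # 200 mebibytes, used by the scheduler for placement
        cpu: "400m"          # 400 millicores = 0.4 of one CPU core
      limits:
        memory: "400Mi"      # exceeding this gets the container OOM-killed
        cpu: "800m"          # usage above this is throttled
```

Note that requests and limits are set per container; a pod's totals are the sums across its containers.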

Setting both resource requests and limits lets a cluster accommodate spiky pods. Resource limits are a hard boundary. CPU is a compressible resource, so a container that tries to use more than its CPU limit is throttled, which hurts performance but does not kill it. Memory is an incompressible resource, so a container that tries to use more than its memory limit is terminated with an OOM (out of memory) error. The kubelet then restarts the container on the same node according to the pod's restart policy; the pod is not moved to another node. Kubernetes also allows resources to be overcommitted, which means the sum of the resource limits of all containers on a node can exceed the node's total resources.
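To make overcommitment concrete, here is a sketch with two pods placed on a single node. The node size (1000m of allocatable CPU), the pod names, and the image are assumptions made up for the arithmetic in the comments.

```yaml
# Assumed node: 1000m allocatable CPU (hypothetical figure for illustration).
# Sum of CPU requests: 400m + 400m = 800m  -> within 1000m, so both pods fit.
# Sum of CPU limits:   800m + 800m = 1600m -> above 1000m, so the node is overcommitted.
apiVersion: v1
kind: Pod
metadata:
  name: spiky-a              # hypothetical pod name
spec:
  containers:
  - name: app
    image: nginx             # placeholder image
    resources:
      requests:
        cpu: "400m"
      limits:
        cpu: "800m"
---
apiVersion: v1
kind: Pod
metadata:
  name: spiky-b              # hypothetical pod name
spec:
  containers:
  - name: app
    image: nginx             # placeholder image
    resources:
      requests:
        cpu: "400m"
      limits:
        cpu: "800m"
```

If both pods spike at the same moment, they compete for the same 1000m and neither reaches its limit. Overcommitting pays off when spikes rarely coincide.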

Despite all the effort put into defining resource requirements up front, what happens if containers use more resources than the node can provide? Kubernetes uses Quality of Service (QoS) classes to decide which pods to evict in resource-crunch situations.

Quality of Service (QoS)

Kubernetes defines three QoS classes: Guaranteed, Burstable, and BestEffort. You cannot assign a class directly; Kubernetes assigns one based on the resource requests and limits defined in the Pod manifest.

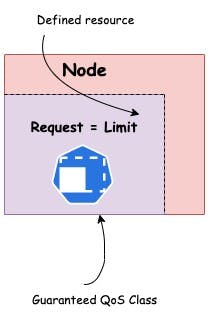

Guaranteed

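A pod is Guaranteed when every container in it has CPU and memory limits that match its CPU and memory requests. The pod gets exactly what it asks for, and its containers are still held to their limits: CPU usage above the limit is throttled, and a container that exceeds its memory limit is killed.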

Note: If a Container specifies its own memory limit, but does not specify a memory request, Kubernetes automatically assigns a memory request that matches the limit. Similarly, if a Container specifies its own CPU limit, but does not specify a CPU request, Kubernetes automatically assigns a CPU request that matches the limit.
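As a sketch of the defaulting described in the note, the manifest below sets only limits. The pod name `qos-pod-limits-only`, the container name, and the image are hypothetical placeholders.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: qos-pod-limits-only  # hypothetical pod name
spec:
  containers:
  - name: app                # hypothetical container name
    image: nginx             # placeholder image
    resources:
      limits:
        cpu: 200m
        memory: 400Mi
      # No requests are given. Kubernetes sets the requests equal to the
      # limits above, so this pod ends up in the Guaranteed class.
```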

    kubectl get po qos-pod-guaranteed -o yaml

    spec:
      containers:
        ...
        resources:
          limits:
            cpu: 200m
            memory: 400Mi
          requests:
            cpu: 200m
            memory: 400Mi
      ...
    status:
      qosClass: Guaranteed

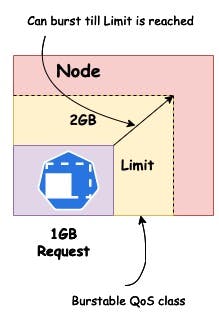

Burstable

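A pod is Burstable when it does not meet the criteria for Guaranteed but at least one of its containers has a CPU or memory request or limit. Typically this means the limits are higher than the requests. Kubernetes lets a Burstable pod use resources up to its limits if capacity is available on the node. If the pod uses more than it requested and the node runs short of resources, the pod may be evicted.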

    kubectl get po qos-pod-burstable -o yaml

    spec:
      containers:
        ...
        resources:
          limits:
            cpu: 400m
            memory: 800Mi
          requests:
            cpu: 200m
            memory: 400Mi
      ...
    status:
      qosClass: Burstable
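The QoS class is computed over all containers in a pod, not per container. As a sketch (pod name, container names, and images are placeholders), the pod below is classified as Burstable even though its first container has matching requests and limits, because the second container sets nothing.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: qos-pod-mixed        # hypothetical pod name
spec:
  containers:
  - name: main               # requests equal limits for this container
    image: nginx             # placeholder image
    resources:
      requests:
        cpu: 200m
        memory: 400Mi
      limits:
        cpu: 200m
        memory: 400Mi
  - name: sidecar            # no resources at all for this container
    image: busybox           # placeholder image
    command: ["sleep", "3600"]
# Not every container has matching requests and limits, so the pod
# cannot be Guaranteed; at least one container sets resources, so it
# is not BestEffort either. The result is Burstable.
```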

BestEffort

A pod is BestEffort when none of its containers specify any resource requests or limits. Such pods may use whatever resources are available on the node, but they are the first to be killed when the node needs to make room for pods of a higher QoS class (Burstable or Guaranteed).
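For comparison with the two examples above, a BestEffort pod is simply one with no `resources` block in any container. The manifest below is a minimal sketch; the pod name `qos-pod-besteffort` mirrors the naming used earlier but is hypothetical, as are the container name and image.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: qos-pod-besteffort   # hypothetical pod name
spec:
  containers:
  - name: app                # hypothetical container name
    image: nginx             # placeholder image
    # No resources block: no requests and no limits are set,
    # so Kubernetes classifies the pod as BestEffort.
```

Running `kubectl get po qos-pod-besteffort -o yaml` on such a pod would show `qosClass: BestEffort` under `status`.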

What should you do?

At first glance, Guaranteed seems to be the best QoS class for every pod. But remember that resources (memory and CPU) cost money 💰. Let us try to understand how to approach this. Suppose we have a fixed amount of resources and want requests and limits to be equal (the Guaranteed QoS class). We have to either raise the requests or lower the limits. If we raise the requests, we may reserve more than the pod normally needs, and another pod that could have been scheduled on the node no longer fits. If we lower the limits, the pod may be throttled or OOM-killed during peak hours. This is similar territory to sizing VMs.

Scheduling of a Pod is based on requests and not limits

Where Kubernetes differs from conventional resource allocation is Burstable pods. Here, the amount of resources reserved for the pod (its requests) is lower than the amount the pod may use during surge hours (its limits).

Pods with the BestEffort QoS class get the lowest priority. Because they specify no resource requests, the Kubernetes scheduler may place them on any node that has resources available.

During node-pressure eviction, the kubelet selects pods to evict roughly in order of QoS class. BestEffort pods are evicted first, followed by Burstable pods, and finally Guaranteed pods.

Always specify resource requests and limits. This helps Kubernetes schedule and manage the pods properly. For critical pods or StatefulSets, prefer the Guaranteed class, and use Burstable for the less critical ones.